Behind the Scenes with Doodler

Sharon Fitzpatrick and Jaycee Favela

Coast Train Developers

Get a behind the scenes look into the team working with Doodler, the workflow, Doodler's evolution and much more!

Just a quick note about the changes in v1.2.6 (08/28/21):

npz files now use compression, so they will be smaller

doodler by default now uses threading, so it should be faster

You can disable this by editing environment/settings.py and setting THREADING to False

new variable 'orig_image' written to npz, as well as 'image'

The two are identical except when converting imagery to floats creates a 4th band to accommodate a nodata band in the input. 'orig_image' and 'image' therefore have different uses for segmentation, and both are preserved. 'orig_image' is now the one written to npz files for Zoo

new script gen_images_and_labels_4_zoo.py, which creates greyscale jpegs and 3-band color images (the original images) and writes them to the results folder

updated the other utility scripts to accommodate the new variable 'orig_image', which now takes priority over the variable 'image'

website and README updated with details about all of the utility scripts
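As a rough illustration of the npz changes listed above, the sketch below writes a compressed npz with NumPy and reads the image back, preferring 'orig_image' over 'image'. The helper name `load_image_for_zoo`, the file name, and the synthetic arrays are assumptions made for the example; only the two variable names come from the notes above, and this is not Doodler's own code.

```python
import os
import tempfile

import numpy as np


def load_image_for_zoo(npz_path):
    """Return 'orig_image' if the file has it, otherwise fall back to 'image'."""
    with np.load(npz_path) as data:
        key = "orig_image" if "orig_image" in data.files else "image"
        return data[key]


# Synthetic stand-ins: a 3-band original and a 4-band float version of it.
orig = np.zeros((8, 8, 3), dtype=np.uint8)
img = np.zeros((8, 8, 4), dtype=np.float32)

path = os.path.join(tempfile.mkdtemp(), "example.npz")
np.savez_compressed(path, image=img, orig_image=orig)  # compressed on disk

loaded = load_image_for_zoo(path)
print(loaded.shape)
```

Older npz files that only contain 'image' still load through the same helper, because of the fallback.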

Tutorial: How to change doodles shapes in doodler.

Tutorial: How to use the erase tool in doodler.

Tutorial: How to use the zoom and pan tools in doodler.

Existing users of Doodler should be aware of the major changes in version 1.2.1, posted May 10. There are a lot of them, and they mean you will use Doodler a little differently going forward. I hope you like the changes!

First, Doodler has a new documentation website (you know this because you are here).

There are so many new features in this release they are organized by theme ...

Model independence factor replaces the previous "color class tolerance" parameter (the mu parameter in the CRF). Higher numbers give the model greater ability to 'undo' class assignments from the RF model. Typically, you want to trust the RF outputs and keep this number small.

Blur factor replaces the previous "Blurring parameter" (the theta parameter in the CRF). Larger values mean more smoothing.

Blur factor, then Model independence factor, then downsample, and finally probability of doodle: these are in the order of likelihood that you will need to tweak them.

In predict_folder.py, the user decides between two modes, saving either default basic outputs (final output label) or the full stack of outputs for debugging or optimizing

In predict_folder.py, extracted features are memory mapped to save RAM

utils/plot_label_generation.py is a new script that plots all the minutiae of the steps involved in label generation, making plots and large npz files containing lots of variables I will explain later. By default each image is modeled with its own random forest. Uncomment "#do_sim = True" to run in 'chain simulation mode', where the model is updated in a chain, simulating what Doodler does.

utils/convert_annotations2npz.py is a new script that will convert annotation label images and associated images (created and used respectively by/during a previous incarnation of Doodler) to npz

utils/gen_npz_4_zoo.py is a new script that will strip just the image and one-hot encoded label stack image for model training with Zoo

This is a simple utility that allows you to visualize the contents of npz files

From the main Doodler folder ...

The syntax is this:

-t (input npz file type)

labelgen

npz_zoo

which is equivalent to

plot_label_generation

gen_npz4zoo (i.e. the inputs to segmentation Zoo)

Doodler works well with small to medium sized imagery where the features and objects can be labeled without much or any zoom or pan. This depends a lot on the image resolution and content, so it is difficult to give general guidelines.

But it's easy enough to chop images into pieces, so you should experiment with a few different image sizes.

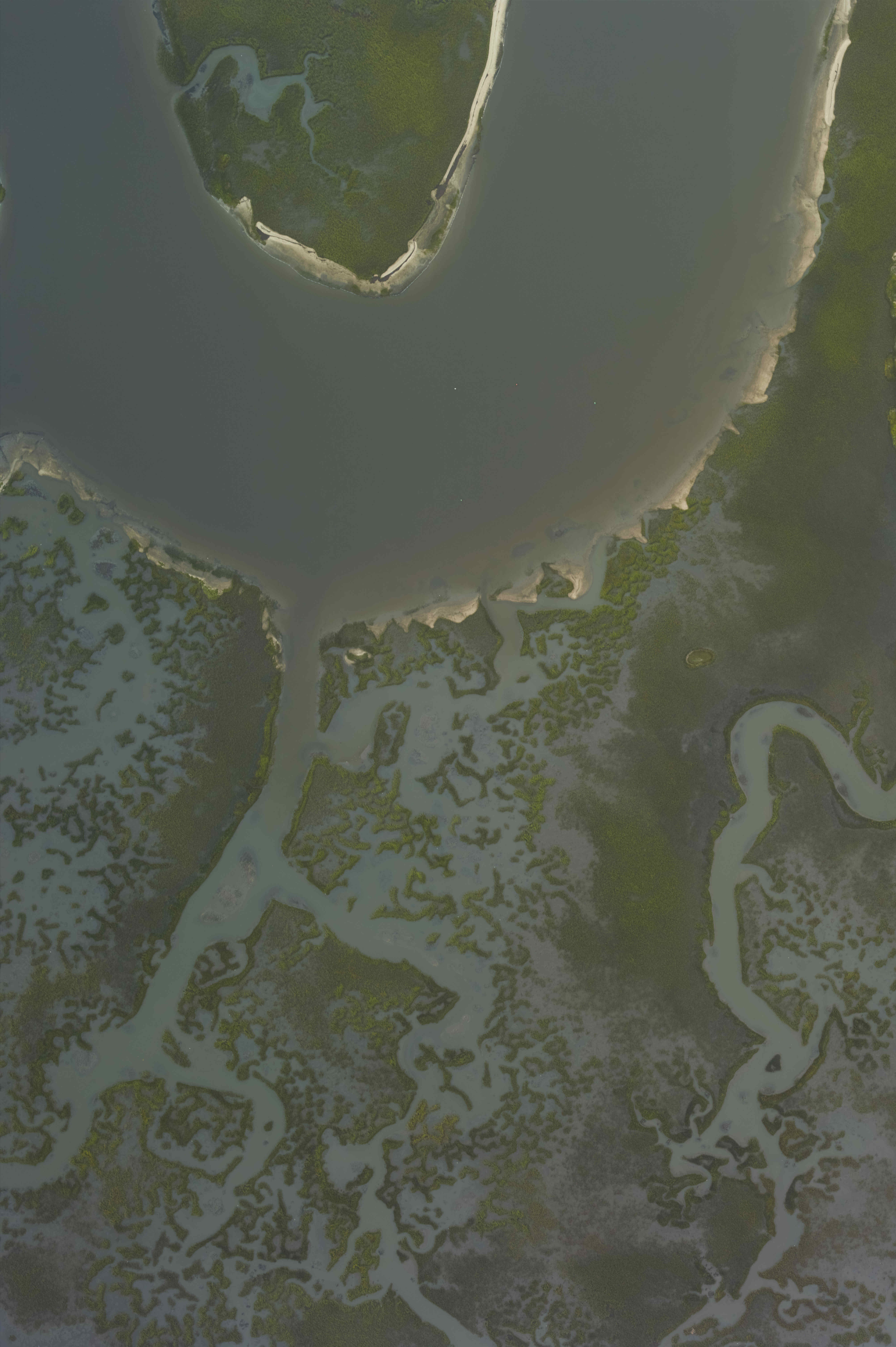

Let's start with this image called big.jpg:

I recommend the command-line program ImageMagick, available for all major platforms. It's an incredibly powerful and useful set of tools for manipulating images, including splitting and merging imagery. We use the magick command (convert on some Linux distributions).

Split into two lengthways:
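A minimal sketch of such a call, wrapped in Python so it can be scripted. It assumes "lengthways" means a left half and a right half, and the output name `half_%02d.jpg` is made up for the example. `-crop` and `+repage` are standard ImageMagick options.

```python
import shutil
import subprocess
from pathlib import Path

# -crop 50%x100% cuts pieces that are half the width and the full height;
# +repage resets each piece's canvas offset; %02d numbers the output files.
halves_cmd = ["magick", "big.jpg", "-crop", "50%x100%", "+repage", "half_%02d.jpg"]

# Only run when ImageMagick and the input image are actually present.
if shutil.which("magick") and Path("big.jpg").exists():
    subprocess.run(halves_cmd, check=True)
else:
    print(" ".join(halves_cmd))
```

Swap the geometry to `100%x50%` for a top half and a bottom half instead.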

Following the same logic, to chop the image into quarters, use:
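One way to write this, again as a hedged Python wrapper around the ImageMagick call. The output name `quarter_%02d.jpg` is an assumption; the pieces are numbered left to right, top to bottom.

```python
import shutil
import subprocess
from pathlib import Path

# 50%x50% asks for pieces half the width and half the height: four quarters.
quarters_cmd = ["magick", "big.jpg", "-crop", "50%x50%", "+repage", "quarter_%02d.jpg"]

# Only run when ImageMagick and the input image are actually present.
if shutil.which("magick") and Path("big.jpg").exists():
    subprocess.run(quarters_cmd, check=True)
else:
    print(" ".join(quarters_cmd))
```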

The first two quarters are shown below:

To chop the image into tiles of a specific size, for example 1024x1024 pixels, use:
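A sketch of a fixed-size crop, with a small helper to estimate how many tiles to expect. The helper `tile_count`, the output name, and the 4000x3000 example size are all assumptions for illustration. When the image size is not a multiple of the tile size, ImageMagick writes smaller tiles along the right and bottom edges.

```python
import math

tile = 1024

# A size with no offset makes -crop cut the whole image into tiles of that size.
tiles_cmd = ["magick", "big.jpg", "-crop", f"{tile}x{tile}", "+repage", "tile_%03d.jpg"]


def tile_count(width, height, tile_size):
    """Number of tiles produced, counting the partial tiles at the edges."""
    return math.ceil(width / tile_size) * math.ceil(height / tile_size)


print(" ".join(tiles_cmd))
print(tile_count(4000, 3000, tile))
```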

The first three tiles are shown below:

Easy peasy!

After you've labeled, you may want to recombine your label images. ImageMagick includes the montage tool, which is handy for the task. For example, the image quarters can be recombined like this:
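A sketch of a montage call for four quarters, assuming hypothetical label files named `quarter_label_00.png` to `quarter_label_03.png`, listed in the order the quarters were cut. `-mode concatenate` butts the tiles together with no borders, and `-tile 2x2` lays them out as two columns by two rows. On some ImageMagick 7 installs the tool is invoked as `magick montage` instead.

```python
import shutil
import subprocess
from pathlib import Path

# Hypothetical label files, in row-major order (top-left, top-right, ...).
quarters = [f"quarter_label_{i:02d}.png" for i in range(4)]

montage_cmd = ["montage", "-mode", "concatenate", "-tile", "2x2",
               *quarters, "recombined.png"]

# Only run when the montage tool and all four inputs are present.
if shutil.which("montage") and all(Path(q).exists() for q in quarters):
    subprocess.run(montage_cmd, check=True)
else:
    print(" ".join(montage_cmd))
```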

and the equivalent command to combine the two vertical halves is:
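A sketch of that call for two halves placed side by side, with made-up file names. The only change from a 2x2 layout is the `-tile 2x1` geometry (two columns, one row).

```python
import shutil
import subprocess
from pathlib import Path

# Hypothetical label files for the left and right halves, in that order.
halves = ["half_label_00.png", "half_label_01.png"]

montage_halves_cmd = ["montage", "-mode", "concatenate", "-tile", "2x1",
                      *halves, "recombined.png"]

# Only run when the montage tool and both inputs are present.
if shutil.which("montage") and all(Path(h).exists() for h in halves):
    subprocess.run(montage_halves_cmd, check=True)
else:
    print(" ".join(montage_halves_cmd))
```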

Happy image cropping!

Doodler can work with really large images, but it is usually best to keep your images under 10,000 pixels in any dimension, because then the program will do CRF inference on the whole image at once rather than in chunks. This usually results in better image segmentations that are more consistent with your doodles.

So, this post is all about how to make smaller image tiles from a very large geoTIFF format orthomosaic, using Python. The smaller tiles are written out as image files, with their relative position in the larger image described in the file name, for easy reassembly.

We'll need a dependency not included in the doodler environment: gdal

conda install gdal

Now, in python:

How large do you want your output (square) image tiles to be? (in pixels)

What images would you like to chop up?

List the widths and heights of those input bigfiles

Specify a new folder for each set of image tiles (one per big image)

Make file name prefixes by borrowing the folder name:
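A small sketch of the folder and prefix steps, using only the standard library. The input file names and the `_tiles` suffix are assumptions for illustration.

```python
from pathlib import Path

# Hypothetical inputs; substitute your own orthomosaics.
bigfiles = ["ortho_A.tif", "surveys/ortho_B.tif"]

# One output folder per big image, named after the file, e.g. "ortho_A_tiles".
folders = [Path(f).with_suffix("").name + "_tiles" for f in bigfiles]

# Reuse the folder name as the prefix of every tile written for that image.
prefixes = [Path(folder).name for folder in folders]

for folder, prefix in zip(folders, prefixes):
    # Path(folder).mkdir(exist_ok=True) would create the folder here.
    print(folder, prefix)
```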

Finally, loop through each file and chop it into chunks using gdal_translate, called by an os.system() command. Then move the tiles into their respective folders.
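The loop can be sketched as below. This is not the original code: it swaps os.system() for subprocess.run with an argument list, writes each tile straight into its folder rather than moving it afterwards, and clamps the windows at the image edges. The file name, image size, tile size, and helper names are all hypothetical. `-srcwin xoff yoff xsize ysize` is the gdal_translate option for copying a pixel window.

```python
import shutil
import subprocess
from pathlib import Path


def tile_windows(width, height, tilesize):
    """Yield (xoff, yoff, xsize, ysize) windows covering the image, clamped at the edges."""
    for yoff in range(0, height, tilesize):
        for xoff in range(0, width, tilesize):
            yield xoff, yoff, min(tilesize, width - xoff), min(tilesize, height - yoff)


def translate_command(src, dst, window):
    """Build a gdal_translate call that copies one pixel window to a GeoTIFF."""
    xoff, yoff, xsize, ysize = window
    return ["gdal_translate", "-of", "GTiff", "-srcwin",
            str(xoff), str(yoff), str(xsize), str(ysize), src, dst]


# Hypothetical inputs: one big image with a known width and height in pixels.
bigfile, width, height, tilesize = "ortho.tif", 12000, 7000, 5000
folder = Path("ortho_tiles")
prefix = folder.name

commands = []
for win in tile_windows(width, height, tilesize):
    # The tile's pixel offsets go into its name so it can be reassembled later.
    dst = folder / f"{prefix}_{win[0]}_{win[1]}.tif"
    commands.append(translate_command(bigfile, str(dst), win))

# Only run when GDAL's command-line tools and the input file are present.
if shutil.which("gdal_translate") and Path(bigfile).exists():
    folder.mkdir(exist_ok=True)
    for cmd in commands:
        subprocess.run(cmd, check=True)
else:
    print(len(commands), "tiles planned")
```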

Doodler can use 1, 3, and 4-band input imagery. If the imagery is 3-band, it is assumed to be RGB and is, by default, augmented with 3 additional derivative bands.

But how do you make a 4-band image from a 3-band image and a 1-band image?

That additional band might have been acquired with an additional sensor, but more commonly it is a DEM (Digital Elevation Model) corresponding to the scene.

I know of two ways. If you have gdal binaries installed, first strip the image into its component bands using gdal_translate
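A sketch of the band-splitting step, built as Python argument lists. The file names `rgb.tif` and `band1.tif` to `band3.tif` are assumptions; `-b` is the gdal_translate option that selects an input band (numbered from 1).

```python
import shutil
import subprocess
from pathlib import Path

rgb_file = "rgb.tif"  # hypothetical 3-band input

# One gdal_translate call per band, each writing a single-band file.
band_cmds = [["gdal_translate", "-b", str(b), rgb_file, f"band{b}.tif"]
             for b in (1, 2, 3)]

# Only run when GDAL's command-line tools and the input file are present.
if shutil.which("gdal_translate") and Path(rgb_file).exists():
    for cmd in band_cmds:
        subprocess.run(cmd, check=True)
else:
    for cmd in band_cmds:
        print(" ".join(cmd))
```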

Then merge them together using gdal_merge.py
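A sketch of the merge step, assuming three single-band files plus a hypothetical `elevation.tif` on the same grid. `-separate` tells gdal_merge.py to place each input in its own output band, and `-o` names the output file.

```python
import shutil
import subprocess
from pathlib import Path

# Hypothetical single-band inputs: red, green, blue, then elevation.
inputs = ["band1.tif", "band2.tif", "band3.tif", "elevation.tif"]

merge_cmd = ["gdal_merge.py", "-separate", "-o", "rgb_elev.tif", *inputs]

# Only run when gdal_merge.py is on the PATH and every input exists.
if shutil.which("gdal_merge.py") and all(Path(f).exists() for f in inputs):
    subprocess.run(merge_cmd, check=True)
else:
    print(" ".join(merge_cmd))
```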

The equivalent in Python can be achieved without the gdal bindings, using the libraries already in your doodler conda environment

First, import libraries

Read RGB image

Read elevation and get just the first band (if this is 3-band)

If you had a 1-band elevation image, it would be this instead...

Merge bands - creates a numpy array with 4 channels
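A minimal sketch of the band selection and merge with NumPy, using synthetic arrays in place of images read from file (the array sizes and values are made up). `np.dstack` treats a 2D array as a single band and stacks it along the third axis.

```python
import numpy as np

# Synthetic stand-ins for the arrays read from file.
rgb = np.zeros((100, 150, 3), dtype=np.uint8)
elev = np.ones((100, 150, 3), dtype=np.uint8)  # elevation stored as 3 bands

# Keep just the first band if the elevation image has more than one.
if elev.ndim == 3:
    elev = elev[:, :, 0]

# Stack along the band axis: 3 RGB bands plus 1 elevation band.
merged = np.dstack((rgb, elev))
print(merged.shape)
```

Both arrays must have the same number of rows and columns for the stack to work.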

Write the image to file

You can use the following to read it back in

And verify with 'shape' - it should be 4 bands